Antonym: The Agent Edition

ChatGPT Agent does my weekly shop. And then writes a PowerPoint presentation on how to do it.

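You may have seen this quote from the author Joanna Maciejewska on your socials recently:

“I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes.”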

Quite right.

We’re going to have to wait a few years for robots that can do more of the laundry and dishes than our machines already do. But not that long.

Screen-based drudgery, like online grocery shopping, will be done away with first. Testing ChatGPT’s new Agent feature (which will appear in most paid-for accounts over the next few days), I was delighted to find that I could put in a shopping list and have it go and do the weekly shop.

Online shopping sounds simple, but for most of us the reality is a boring chore. You often have to compromise when something’s not available or choose between lots of similar options, and all those micro-decisions add up to fatigue.

The more fluent you are in using AI tools, the more useful and interesting ChatGPT Agent will be (see the AI literacy ladder below, and the detailed explanation of how agents are different to other bots). At first I thought a weekly shop would be beyond it. It couldn’t get on to Sainsbury’s website at all, but once I’d found a supermarket website that it could navigate, it asked me to log it into my account (you temporarily “take over” the browser to do this), and then, slowly and hesitantly, it started to find its way around.

The slowness isn’t a problem. It took 36 minutes to complete the shop, which sounds like no time saving at all. But you don’t actually need to hang around and watch it: I got on with other things and checked in every now and again to make sure it hadn’t got stuck.

Manus, a Chinese agent app, can also do this. In fact, it very quickly put together a complicated basket from a list of supplements and other health-related supplies in my Amazon account. It just hadn’t occurred to me to give it a shopping list before. Another example of AI capabilities being “discovered, rather than designed”.

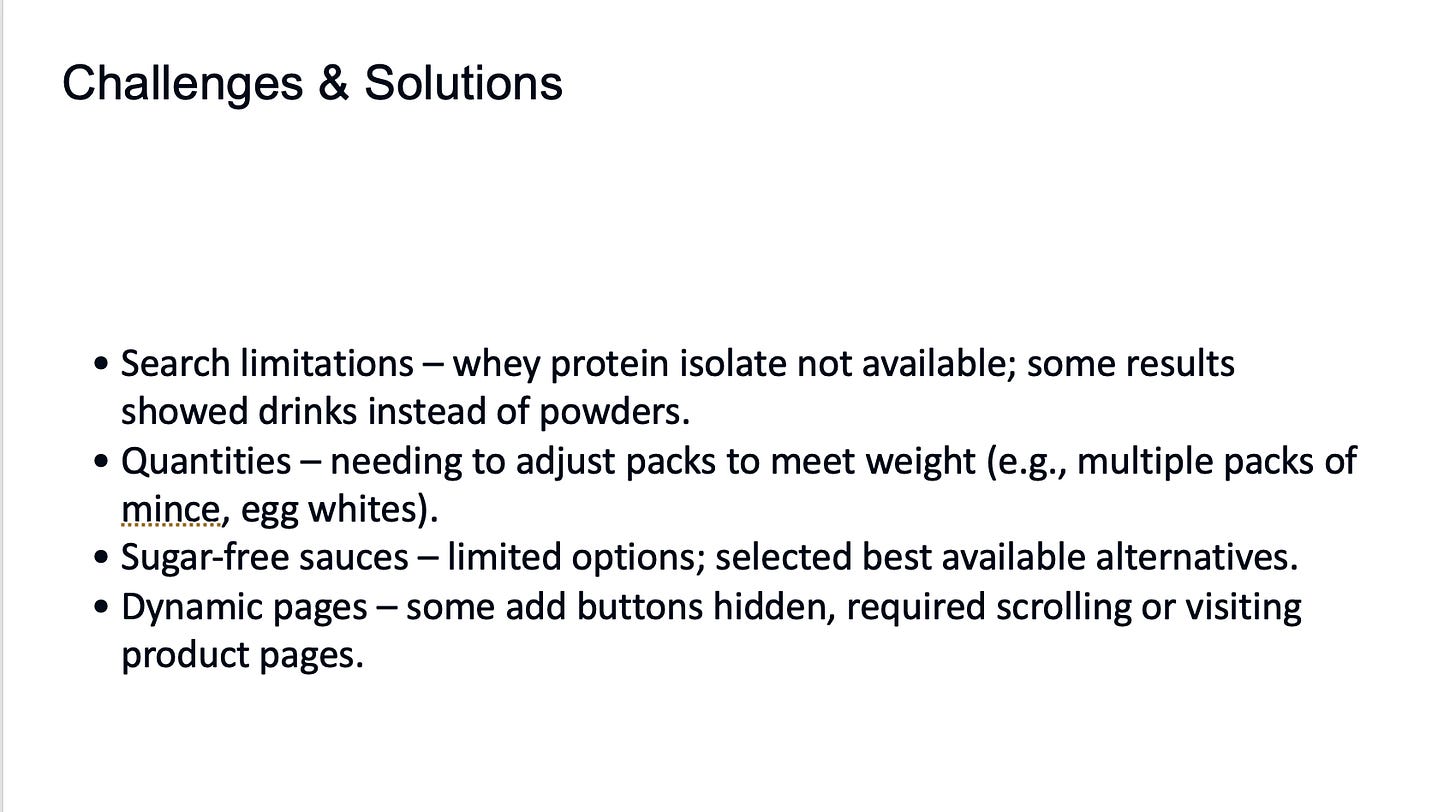

After ChatGPT Agent had finished its shop, I asked it to write a PowerPoint presentation about its experience of the task. This slide shows all the times it had to make a micro-decision:

And its final reflections on the task:

Navigating Waitrose online required patience and a systematic approach.

Search functionality was powerful but sometimes surfaced unrelated items.

Careful quantity adjustments ensured we met weight goals without over‑ordering.

Final confirmation step respects user consent before placing the order.

Cute.

Agents in action

ChatGPT does a really impressive job of some tasks – it researched and created a decent PowerPoint presentation for me – but stumbles and dithers on others. I’m not sure a general user would have stuck with it long enough to get the shopping exercise to work – but this will improve fast (because where there’s shopping there’s revenue).

I tested ChatGPT Agent on a number of real tasks, comparing it with Manus and Genspark, two Chinese competitors that have established a lead in this space and have been rapidly adding features over the past few weeks.

Here’s my experience with four practical test cases that show the current state of play:

Research and presentation creation: ChatGPT Agent proved surprisingly effective. It researched IP protection advice for services companies developing software products for the first time (an increasingly common scenario) and produced a comprehensive PowerPoint deck with solid strategic frameworks and current best practices. Unlike other attempts to create slide decks with AI, the output was an actual PowerPoint file, which made it easier to port into other apps and sort out the design. Here’s the Gamma version, which I made in a minute by uploading the file and switching it to Brilliant Noise’s brand template (the images are rubbish, but they can easily be changed).

Document organisation from screenshots: When tasked with categorising dozens of NotebookLM files from a screengrab, the agent created a logical filing system and framework, delivered as a spreadsheet, though it couldn’t access Google Drive directly to implement the organisation.

Client research and analysis: The agent delivered strong results, comparable to specialist agent platforms. Background research that previously took hours now takes minutes, with the system producing detailed briefs on potential clients’ priorities, recent developments, and likely strategic concerns.

Shopping: All the agents take a long time to do the shopping for you and need a little help at times. Manus was the fastest and smoothest, filling a grocery basket at Waitrose from a shopping list and figuring out sensible approaches to little obstacles and the choice overload of online shopping. (I tried Sainsbury’s too, but something about its website kept the agents out.)

The pattern that’s emerging is that agents excel at structured research and document creation but struggle with nuanced analysis requiring domain expertise. Their effectiveness also correlates directly with the user’s AI literacy: those comfortable with creating custom GPTs and with reasoning processes extract significantly more value.

What are agents and how are they different from AI bots?

Agents are AI systems that can plan, act, manage and use tools (and other agents). (We have a short explainer of the difference between AI agents and simpler tools in the Brilliant Noise newsletter.)

The language is shifting as fast as the technology in this area, so I offer my own working definitions below:

Understanding AI Chat Tools and Agents: A Plain Guide

There’s a growing ecosystem of AI tools out there – from chatbots to fully-fledged digital assistants. Here’s a quick guide to the key types, what they do, and how they differ.

1. Standard AI Chat Tools

These are the big-name chatbots like ChatGPT, Claude, Gemini, and Grok. You type in a question or task in everyday language, and they respond by predicting what should come next – based on patterns they’ve learned from huge amounts of data. You can talk to them about almost anything, and they sometimes generate images or code too. Think of them as very clever, well-read autocomplete systems you can have a conversation with.

2. Custom Bots

These are regular chat tools with extra instructions baked in – like telling it to “act as my marketing coach” or “mark essays using this scheme”. You’re still talking to one model, but you’ve narrowed its focus. You might see these called Custom GPTs, Claude Artifacts or Gemini Gems. They’re useful for recurring tasks or sticking to a particular tone or format.

3. Agent Apps

Agent apps go a step further – they still rely on the same base model, but now it can take extra actions like searching the web, running code or handling a few steps in a row to complete a task. It’s still just one agent working on one thing at a time, but with tools it can call on when needed. Think of it as a chatbot with a small toolkit.
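To make the “chatbot with a small toolkit” idea concrete, here is a minimal Python sketch of the loop an agent app runs: the model picks a tool, the app calls it, and the result is fed back in until the model decides it is done. Everything here is hypothetical: `search`, `count_words`, `stub_model` and `run_agent` are illustrative names, and the “model” is a scripted stand-in so the loop runs offline, not a real LLM or any vendor’s API.

```python
from typing import Callable

# A tool is just a function from a text argument to a text result.
Tool = Callable[[str], str]


def search(query: str) -> str:
    """Hypothetical web-search tool: returns a canned snippet."""
    return f"Top result for '{query}': 3 shops stock it"


def count_words(text: str) -> str:
    """Hypothetical 'run code' tool: a tiny deterministic computation."""
    return str(len(text.split()))


TOOLS: dict[str, Tool] = {"search": search, "count_words": count_words}


def stub_model(task: str, history: list[tuple[str, str]]) -> tuple[str, str, str]:
    """Stand-in for the base model. A real agent would ask an LLM to choose
    the next step; here the choice is scripted.
    Returns (kind, tool_name, payload) where kind is 'tool' or 'answer'."""
    if not history:
        return ("tool", "search", task)
    if len(history) == 1:
        return ("tool", "count_words", history[0][1])
    return ("answer", "", f"Done: {history[0][1]} ({history[1][1]} words)")


def run_agent(task: str, max_steps: int = 5) -> tuple[str, list[tuple[str, str]]]:
    """One agent, one task: loop until the model answers or steps run out."""
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        kind, name, payload = stub_model(task, history)
        if kind == "answer":
            return payload, history
        result = TOOLS[name](payload)    # call the tool the model chose
        history.append((name, result))   # feed the observation back in
    return "Stopped: step limit reached", history


if __name__ == "__main__":
    answer, steps = run_agent("oat milk")
    print(answer)  # Done: Top result for 'oat milk': 3 shops stock it (9 words)
```

The step limit is there because a real model can loop or dither, much as ChatGPT Agent did on the shopping task; capping steps is one simple way an app might keep it from running forever.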

4. Custom Agents

These are digital helpers built specifically for a company or purpose. Behind the scenes, they still use AI models, but now they’ve been embedded into a workflow – say managing a content calendar or handling a reporting task – with access to relevant company data, memory of previous interactions, and sometimes scheduling or logging features. They’re more tightly integrated with business systems, making them more useful – and less generic – than off-the-shelf chatbots.

5. Agentic Systems

This is where it gets more sophisticated. Instead of one agent, you have a team of them – each doing a specific job (planner, researcher, scheduler etc.) – coordinated by a managing agent that keeps them on track. Together, they can carry out complex, multi-step work like building a project plan or booking meetings and notifying people. More powerful, but also more complex to build and maintain.
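The manager-and-specialists arrangement can be sketched the same way. This is a toy illustration, assuming a planner that returns (role, subtask) pairs and specialists that are plain functions; `planner`, `researcher`, `scheduler`, `notifier` and `manager` are hypothetical names, not how any particular agent platform is built.

```python
from typing import Callable

# A specialist agent takes a subtask description and returns its output.
Agent = Callable[[str], str]


def planner(goal: str) -> list[tuple[str, str]]:
    """Hypothetical planner agent: breaks a goal into (role, subtask) steps."""
    return [
        ("researcher", f"gather background on {goal}"),
        ("scheduler", f"book a meeting about {goal}"),
        ("notifier", f"tell attendees about {goal}"),
    ]


# Hypothetical specialists; in practice each could wrap its own model and tools.
def researcher(subtask: str) -> str:
    return f"brief: {subtask}"


def scheduler(subtask: str) -> str:
    return f"meeting booked: {subtask}"


def notifier(subtask: str) -> str:
    return f"invites sent: {subtask}"


SPECIALISTS: dict[str, Agent] = {
    "researcher": researcher,
    "scheduler": scheduler,
    "notifier": notifier,
}


def manager(goal: str) -> dict[str, str]:
    """Managing agent: asks the planner for steps, routes each step to the
    matching specialist, and records what happened so nothing is lost."""
    log: dict[str, str] = {}
    for role, subtask in planner(goal):
        worker = SPECIALISTS.get(role)
        if worker is None:
            # Keep the plan on track: record the gap instead of failing silently.
            log[role] = "skipped: no specialist for this role"
            continue
        log[role] = worker(subtask)
    return log


if __name__ == "__main__":
    for role, outcome in manager("the Q3 review").items():
        print(role, "->", outcome)
```

In a real agentic system each specialist would likely call its own model and tools, and the manager would check outputs and re-plan when a step fails; this sketch only shows the routing and record-keeping, which is part of why such systems are more complex to build and maintain.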

Levels of AI literacy

Most discussions about AI skills focus on prompting techniques or tool familiarity. But 2025 has revealed a more structured hierarchy among users, with clear capability gaps between levels that create compounding advantages for those who advance.

We laid out a three-tier AI literacy framework in Prepared Minds last year that has been useful, but it now needs adjusting, both because of what we have learned watching people develop their own fluency and because the technology has changed.

The framework now looks like this (mapping closely to the description of different agents and tools above):

Base: Unstructured user of AI tools without systematic approach

Level 1: Prompt engineer — understanding how to structure requests for consistent results

Level 2: Tool builder — creating custom GPTs, chatbots, and workflow automation

Level 3: Agent manager — directing AI systems that can use tools and execute multi-step plans.

Level 4: Agentic systems architect — designing complex, adaptive and self-improving interconnected AI workflows.

Each level unlocks an order of magnitude more capability than the previous one. The jump from Level 1 to Level 2 typically requires just weeks of deliberate practice. But people get trapped at each tier because they don’t recognise the next level exists, and those at higher levels struggle to communicate possibilities to beginners.

This creates a communication problem similar to physicists explaining quantum mechanics to novices: expertise becomes a barrier to effective teaching. It’s hard for advanced users to imagine that people don’t have the building blocks of knowledge and experience that they take for granted. A Level 4 practitioner finds it nearly impossible to convey the strategic implications of agentic systems to someone still mastering prompt engineering.

The practical implication: everyone should aim for Level 2 minimum. Building custom tools provides both capability and crucial insight into AI’s actual strengths and limitations — knowledge essential for strategic decision-making regardless of role.

That’s all for this week

I hope you found something useful, and thank you for reading.

Antony
