Antonym: The Agents & Ladders Edition

It takes a system for revolutions to stick

Update: The original edition of this newsletter included a section with data from work-in-progress research. My thanks to Tim O’Neill for asking for clarification. It’s been removed and we’ll include the final insights in a future edition.

Dear Reader

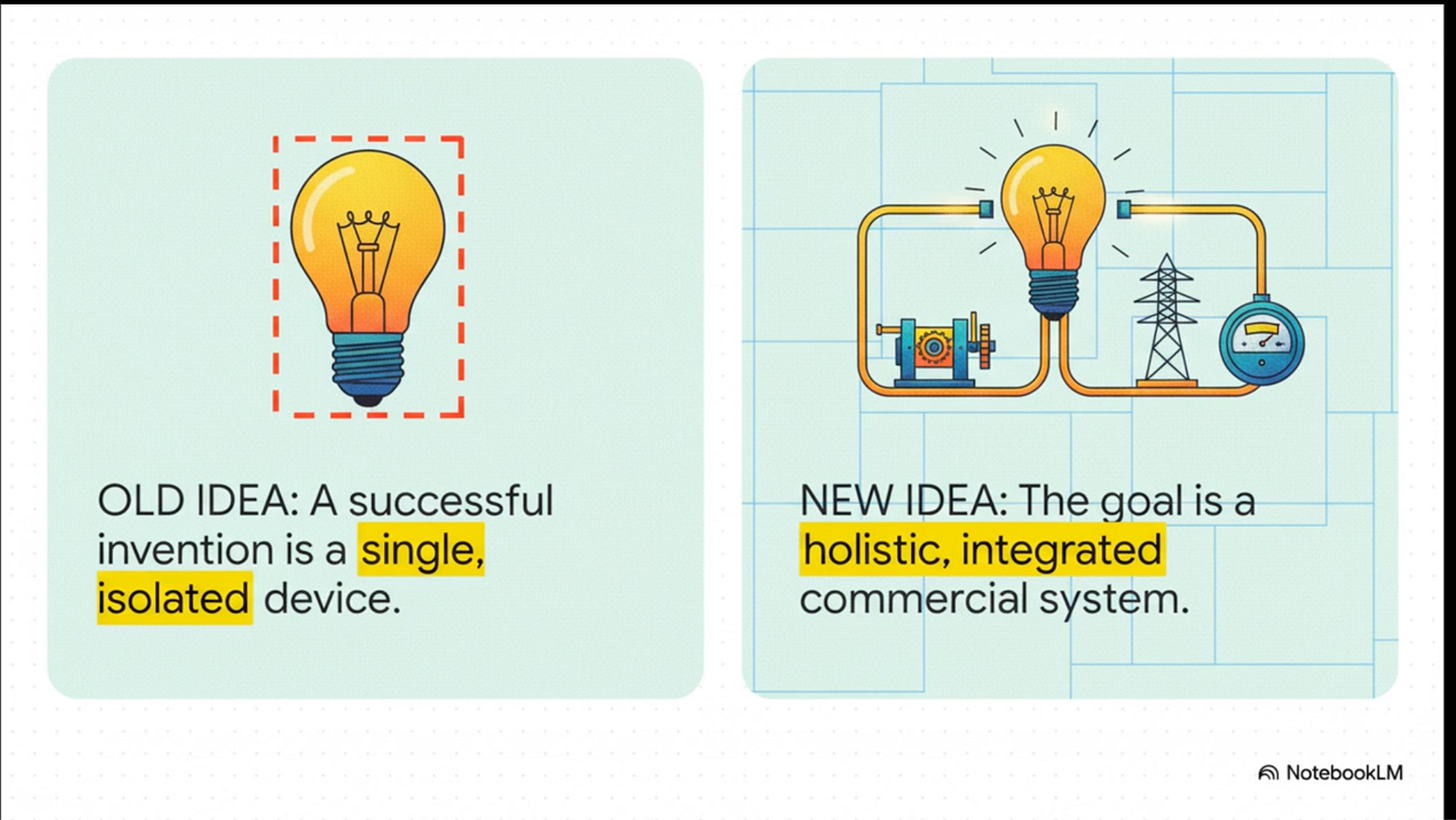

Take a look at this video. It’s about how innovations require systems to have their full effect. The lightbulb needed the electricity grid, the car needed tarmac roads and petrol stations, the computer needed the web to change everything.

There are two sections for you this week: I. a refreshed framework for thinking about AI literacy at the individual and organisational levels, and II. an underreported breakthrough in the use of AI to tell stories with video. And, no – not Sora 2.

Now let’s talk about how we get from the lightbulb that is ChatGPT to a future where intelligence is designed into systems…

I. The AI literacy ladder

It is, on this day of publication, 1054 days since ChatGPT went live and fired the starting gun for generative AI as a transformative technology. Three hundred days ago, it remained genuinely uncertain whether AI agents would materialise into something real or simply dissipate as overheated hype. The pressure cooker was intense; the outcome was unclear.

Around one hundred days ago, back in the heady days of summer, the uncertainty resolved. Agents had become a tangible reality. The idea stopped being speculative and started being operational. We needed to revise our framework – yet again – for understanding what working with artificial intelligence had become.

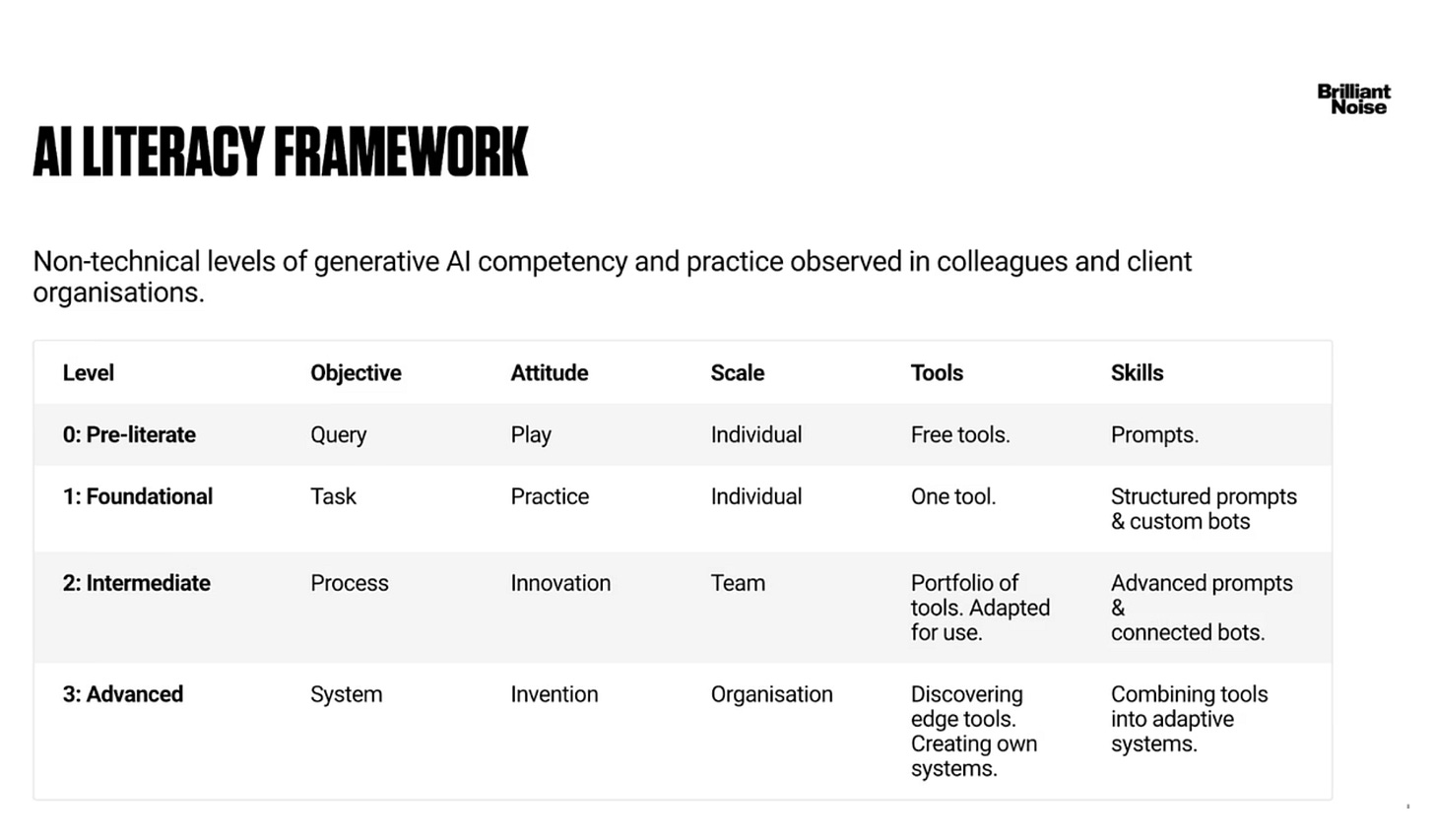

A year ago, Brilliant Noise published Prepared Minds, our paper on AI literacy. It explained what we understood about AI skills and fluency of use from the perspective of individuals, teams, and leaders. One framework readers found useful was our three-level model of AI literacy progression: Foundational, Competent, and Fluent. It described a progression that made sense then.

One year later, “combining tools into adaptive systems” is less conceptual. It’s clear that AI agents can coordinate autonomously, make decisions within defined parameters, and execute complex workflows without human intervention at every step.

The original three levels captured human-AI collaboration well enough. But they could not account for what happens when AI systems stop being tools we use and become agents that work alongside us, making decisions we previously reserved for humans. That distinction matters fundamentally, and it demands an updated framework.

In fact, I would argue the best shift we could make in thinking about how to work with this technology is to stop thinking separately about “artificial intelligence” and human thought and simply consider intelligence. More on that next week.

What Makes an Agent Different from a Tool?

A tool responds to commands: you ask ChatGPT to summarise a document, and it does. An agent operates with autonomy within defined boundaries: it can decide to summarise a document, identify follow-up actions, coordinate with other agents, and execute those actions without requiring approval at each step.

This may sound complex, but if you have spent time working at level one and level two literacy, it becomes straightforward. It’s like an upgrade: fitting an electric motor to your bicycle, or getting a newer model of your car that can suddenly reverse park by itself. Weird, and then normal.

In a customer service setting, a tool might help a human agent draft responses more quickly. An agent system can receive a complaint, research the customer’s history, check inventory systems, coordinate with logistics agents, and resolve the issue: all while keeping humans informed of progress and escalating only when the situation genuinely requires human judgment.

In other words, a tool extends a human’s capability; an agent takes over part of their role within carefully designed boundaries, freeing them to get on with something else.
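For readers who think in code, here is a deliberately toy sketch in Python of the tool/agent distinction, using the customer service example above. Everything in it is hypothetical: the function names, the in-memory “systems”, and the refund limit standing in for the “defined boundaries”. Real agent platforms work very differently under the hood; the point is only the shape of the difference. The tool does one job when asked and hands back to a human. The agent chains several steps on its own, logs its progress for the humans, and escalates when a decision falls outside its boundary.

```python
from dataclasses import dataclass, field

# Hypothetical in-memory stand-ins for real business systems (CRM, stock database).
CUSTOMER_HISTORY = {"c42": ["order 1001: delivered late"]}
INVENTORY = {"kettle": 3, "toaster": 0}


def draft_reply_tool(complaint: str) -> str:
    """A 'tool': does one job when asked; a human decides what happens next."""
    return f"Dear customer, we're sorry to hear about this: {complaint}. We'll look into it."


@dataclass
class Outcome:
    status: str = "pending"                    # becomes "resolved" or "escalated"
    log: list = field(default_factory=list)    # progress updates kept for the human team


def complaint_agent(customer_id: str, item: str, refund_limit: float, value: float) -> Outcome:
    """A hypothetical 'agent': chains several steps itself, inside a boundary (refund_limit)."""
    outcome = Outcome()

    # Step 1: research the customer's history.
    history = CUSTOMER_HISTORY.get(customer_id, [])
    outcome.log.append(f"checked history: {len(history)} prior issue(s)")

    # Step 2: check the inventory system.
    in_stock = INVENTORY.get(item, 0) > 0
    outcome.log.append(f"checked inventory: {item} in stock = {in_stock}")

    # Step 3: act alone where the boundary allows; otherwise escalate to a person.
    if in_stock:
        INVENTORY[item] -= 1
        # Stand-in for coordinating with a (hypothetical) logistics agent.
        outcome.log.append(f"asked logistics to ship a replacement {item}")
        outcome.status = "resolved"
    elif value <= refund_limit:
        outcome.log.append(f"issued refund of {value:.2f}")
        outcome.status = "resolved"
    else:
        outcome.log.append("refund exceeds limit: escalating to a human")
        outcome.status = "escalated"
    return outcome


if __name__ == "__main__":
    print(draft_reply_tool("my toaster arrived broken"))
    result = complaint_agent("c42", "toaster", refund_limit=50.0, value=80.0)
    print(result.status)
    for entry in result.log:
        print(" -", entry)
```

In the example run, the toaster is out of stock and the refund exceeds the agent’s limit, so the outcome is escalated with a log the human can read. The boundary is the design decision that matters: widen it and the agent handles more on its own; narrow it and more work comes back to people.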

The AI Literacy Ladder 2.0

The updated framework recognises that agent capabilities create entirely new possibilities for how organisations can operate. We’ve expanded from three levels to four, adding a “Mastery” level that captures what happens when organisations achieve genuine agentic integration at scale.

Level 1: Foundational. Individual experimentation with basic prompts and publicly available LLM tools. Users understand what AI can do but operate reactively, reaching for AI when they remember it exists. Adoption is sporadic and depends entirely on individual initiative.

Level 2: Competence. Teams adopt specific AI tools and develop shared practices around them. Critical evaluation becomes collective. This marks the shift from individual use to organisational capability, yet it remains primarily reactive. Teams use AI to augment existing workflows rather than reimagining what those workflows could become.

Level 3: Fluency. AI becomes embedded in operational workflows through agent integration. Coordinating agents make decisions and execute actions autonomously within defined scope. A customer service agent collaborates with inventory and logistics agents to resolve complex queries without requiring human sign-off at each step. The organisation begins to move at the speed agents can operate. Crucially, this is the stage where AI use connects to broader strategic objectives. Teams shift focus from executing individual tasks to managing and refining complex AI systems.

Level 4: Mastery. Organisations achieve Systems Innovation, orchestrating agent teams and building proprietary agentic networks. Research agents autonomously identify market opportunities, coordinate with product development agents, and brief executive teams with curated analysis. The organisation operates as an integrated human-agent ecosystem where capability emerges from the interaction between human judgment and agent execution. AI becomes an engine to invent entirely new approaches to value creation rather than merely a tool to optimise existing work.

Three dimensions matter: Technology (from Prompts to Agent Networks), Scale (from Individual to Organisation), and Activity (from Using AI to Building Agentic Systems). Organisations progress across all three simultaneously, and progression isn’t linear; it’s networked.

Here’s what changes when organisations move from Competence to Fluency: the nature of human work itself transforms.

At Level 2, humans execute individual tasks augmented by AI. They write emails faster with AI assistance. They create summaries with AI support. They’re still doing the assembly work – AI just accelerates it.

At Level 3, agents manage the assembly. Humans focus on judgment. Strategy. Design. Oversight. Refinement of complex systems. This isn’t an incremental improvement; it’s a change in the category of what humans do all day.

Consider strategic planning. At Level 2, teams manually gather data, synthesise reports, identify patterns, and present analysis to leadership. It’s labour-intensive and slow. At Level 3, agents gather the data, synthesise reports, and identify patterns autonomously. Humans receive curated analysis and make strategic judgments. The work doesn’t disappear; it transforms. Humans move from assembly to judgment.

At Level 4, this shift deepens further. AI becomes an engine for inventing entirely new approaches to value creation. Employees shift to higher-order tasks: strategy, empathy, complex problem-solving. The organisation operates fundamentally differently because the distribution of work between humans and agents has shifted entirely.

This is why Level 3 is so strategically significant. It’s the crucial stage where organisations connect AI use to broader strategic objectives. It’s where competitive advantage begins to compound.

II. Google’s NotebookLM: From Summarisation to Strategic Storytelling

Google’s NotebookLM Video Overviews have been available for several months. But the recent integration of AI image generation, specifically Google’s own Nano Banana image model for custom artwork, has transformed the tool from a summarisation utility into something far more strategically interesting: a multimedia content platform that lets users control not just what story gets told, but how that story gets visualised and emphasised.

Video and agents

The headlines are all about Sora 2, and for good reason, but something far more interesting is happening in Google’s NotebookLM app.

AI vertigo was in full effect in meetings at Brilliant Noise in the latter half of the week, when NotebookLM videos suddenly got a lot more visually interesting. New use cases appeared:

Narrating a brief.

Describing a business plan.

Sharing a concept.

Previously, the best use case had been having NotebookLM present your research and work in progress back to you during a project. It was excellent at summarising research and telling a story about your analysis from a slightly more objective perspective.

Where I might get obsessed with one argument or frame, the NotebookLM videos would give several others equal weight, and I could prompt the video generation to pitch its presentation at a particular audience, such as a client or a specific colleague.

Once again, in this age of daily small wonders, I am aware that this one tool is miraculous and would once have been worth weeks of discussion, yet it is just one of many things that happened this week.

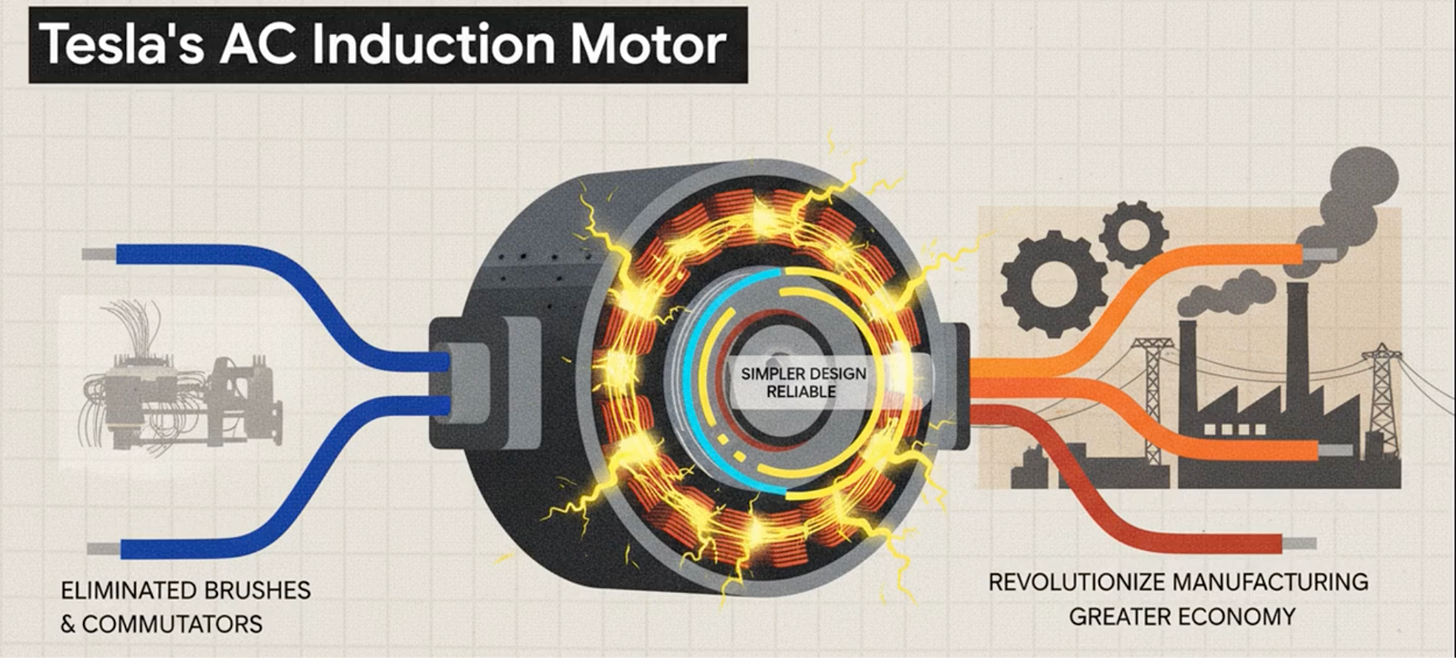

Here’s an example of a whole video I created while researching the innovations that led to electrification. It’s about six minutes long and was generated from extensive research plus a prompt asking the video to address what we could learn from the way electricity became part of everyday life. I was impressed by the graphic design (it uses NotebookLM’s “retro” theme).

Here are some examples of the visuals it is producing.

This image illustrates levels from the AI literacy ladder: on the left is AI tool use; on the right, a connected system of people working with AI.

This illustration accompanies an explanation of the importance of Nikola Tesla’s induction motor.

Remember Mollick’s law: this is the worst version of AI you will ever use. Expect editability, a choice of voices, animations, and interactive storytelling or co-hosting with an AI in future versions. That’s my wish list, anyway.

Of course, there are systems that need to be built. Look at this illustration from the video we opened with.

It looks like an image from a textbook. So can we now generate instant textbooks on anything we want? No. The surrounding systems still need to be developed: the data to feed into it, quality checking of the outputs, and the means of making sure the content reaches learners in the right way.

This nightmare scenario is real, and it is happening now:

Mollick’s law holds: the technology still has improvements to come. But our systems for using AI responsibly aren’t even at literacy level one. The everywhere-all-at-once nature of this technology means this student is trapped in a Kafkaesque system where AI has been deployed ad hoc. The professor is not AI literate enough to realise they need to fact-check the AI outputs they include in a textbook and other course texts, and the university’s authorities are not AI literate enough to spot what has happened, never mind anticipate the risk and support students and staff with literacy programmes.

Versions of this nightmare are being seeded in organisations everywhere, because not enough people have made the fundamental shift to AI fluency, or are even aware enough of it to understand that they will need to move beyond thinking of AI as a simple tool.

That’s all for this week

Thank you for reading. Please do leave feedback and send questions. And since there are easier ways to get a dopamine hit than writing a 1,500-word newsletter, a “Like” is still very much appreciated.

Antony
