Antonym: The AI Advice Edition

Just another trick in the wall.

Dear Reader

AI advice is mostly nonsense. Why? Because it is too early and too wild a frontier for anyone to be as certain as many seem to be.

There’s a spectrum of AI advice – at one end is the management consultant’s generic approach – consider the risks and opportunities etc. etc. – and at the other is the hucksters’ “500 AI tools you need to make you a billionaire tomorrow!”, showing on Insta, TikTok and YouTube right now.

Education is the canary.

To understand a technological revolution’s implications, watch the sectors that are affected first. In the ’00s, it was the media sector: music, news and then entertainment, in roughly that overlapping order. With generative AI, education is where the effects are felt fastest and most robustly.

ChatGPT was eagerly taken up by students under intense pressure to compete for grades at school and then take on large debts to continue their studies in higher education. The initial reaction from many educators was fear and knee-jerk bans. Things are evolving quickly for students, educators and the system as a whole.

Students have the most to gain and lose from the rise of generative AI in education. On the upside, students now have access to a personalised digital tutor and a wealth of engaging learning materials tailored to their needs. They can develop in-demand skills through virtual simulations and benefit from adaptive lesson plans. However, over-reliance on AI risks “learning loss” of core literacy and critical thinking skills that in-person education develops. Students need encouragement to use AI tools judiciously as partners in their learning, not as a crutch.

Educators face a turbulent transition as AI upends their role and workload. AI can free up time for high-value tasks like mentoring students, but it may also be used to cut costs, reducing teaching staff. Educators must proactively redefine their roles and skills for an AI-enabled classroom. They need training to make the most of new technologies while guarding against potential misuse. Easier said than done.

The education system stands to improve access and outcomes, but only if we’re deliberate about implementing AI. Personalised and lifelong learning at scale is now possible. Physical schools may transform into high-tech hubs while micro-schools and virtual boot camps rise. However, costs, privacy concerns and a narrow focus on AI to boost standardised test scores threaten these opportunities. With openness, oversight and creativity, AI can make quality education available to all learners, especially those historically underserved. But we must be willing to reinvent how and what we learn to thrive in the age of AI.

Global innovators in journalism

Meanwhile, digitally literate journalists, still reeling from the last cataclysmic disruption to their social media and search engine trade, are now the fastest to adapt and understand the potential. This week’s webinar from the Reuters Institute for the Study of Journalism features Rishad Patel of Splice Media on how media businesses in Asia use AI.

A couple of other points struck me: search engine optimisation will be hugely disrupted by AI, as people turn to services like ChatGPT rather than search engines and avoid the click-and-link-bait nonsense with which this marketing approach has polluted the web. This is devastating for publishers in the short term, many of whom have adapted to this way of working.

You can watch the whole webinar on YouTube or the summary (from Summarize).

Here are some key points (by AIntonym):

Most journalists (63%) are not yet using generative AI. There are concerns about manipulation and propaganda, but experimentation is also happening.

Some media companies in India are considering using GPT for social media images or multilingual video tools. Starting small, hiring experts and securing data are recommended approaches.

AI can help media companies reach new audiences, e.g. The Nepali Times using AI for Instagram and videos to attract youth. Identifying specific problems AI can solve is critical.

Media companies must take responsibility for AI, have clear policies and move away from monolithic views of audiences. They also have an opportunity to inform audiences accurately about AI.

Costs of AI are decreasing, but human gatekeepers still need to fact-check. Media companies should own mistakes, have policies, and educate and collaborate on AI.

FT’s AI policy

The FT’s editor, Roula Khalaf, published a letter to readers this week about the company’s policy on AI. In case anyone has lingering doubts about AI being overhyped, Khalaf spells it out for us:

Generative AI is the most significant new technology since the advent of the internet. It is developing at breakneck speed and its applications, and implications, are still emerging. Generative AI models learn from huge amounts of published data, including books, publications, Wikipedia and social media sites, to predict the most likely next word in a sentence.

Read the full article [£] with a subscription, or as a free article when you register. AIntonym has summarised some of the key points here:

AI can increase productivity and free up time for reporters to focus on original content.

However, AI models may fabricate facts, create false information, and replicate existing biases.

FT journalism will continue to be reported and written by humans for accuracy and fairness.

The FT newsroom plans to experiment responsibly with AI tools for data analysis, translation, and other tasks.

AI-generated photorealistic images will not be published, but AI-augmented visuals may be used with clear disclosure.

An internal register will record newsroom experimentation with AI, including third-party provider usage.

Journalists will receive training on using generative AI for story discovery through masterclasses.

The FT remains committed to its mission and will keep readers informed on generative AI developments and its applications.

How long before your organisation feels compelled to publish its policy?

Fake it ’til you fake it.

Influencers have been pretending to be at the super-sized US music festival Coachella with fake photoshoots and videos, but apparently their fans and sponsors don’t care.

“Our impression is that the brands are in on this as well,” Danielle Wiley, founder and CEO of influencer marketing agency Sway Group, said via email. “Both brands and creators are taking advantage of the buzz and traffic generated by the Coachella name without necessarily participating in the festival itself,” she continued.

Prompt: Add confirmation of your bias about popular culture/youth / TikTok <here> to personalise your newsletter experience.

Schadenfreude pt.1 My-oh-my VICE

There was some excellent journalism that happened at VICE, I’m told. And I respect that.

I had just two encounters with VICE during its brief cheap-money-fuelled sugar rush, which burned out this week. One was at the Edinburgh TV festival. I’d been on a panel with some TV execs earlier in the day to talk about the impact of digital disruption on media. My company, Brilliant Noise, was working on capability and strategy projects for the Financial Times, The Economist and Universal Pictures around then, so our heads were very much in the media game at the time.

VICE’s founder, Shane Smith, gave the MacTaggart Lecture, the event’s keynote speech. The lecture is an honour given to the great, the good and the terrifyingly powerful of the industry. Emily Maitlis gave it last year, and Dennis Potter, Greg Dyke and most of the Murdochs have had a turn at the lectern. Smith’s presence there was a provocation to some and an outright insult to many, it seemed.

Smith seemed either to be, or to be intent on giving the impression of being, under the influence of at least two of the many intoxicants he referenced during the lecture, and he made it clear he gave not one fig about that. He opened with a quote from Withnail and I, a promising start, but went downhill fast from there.

The other time was at an advertising conference in Ireland. A VICE media exec’s presentation was the keynote after the dinner on the first night. He had some slick shtick to show us. The presentation featured some LOUD and COOL videos, the playing of which allowed the speaker to pop backstage for a couple of minutes at a time, apparently to re-up his supply of self-confidence, which came in a convenient powdered form if his increased zest and tempo upon his return each time were anything to go by. If you’ve ever been stuck talking to someone at a party who has indulged in non-organic coca-leaf derivatives and wished they would shut up and go away, you’ll know how it was for that half-hour bore-a-thon for the assembled senior executives.

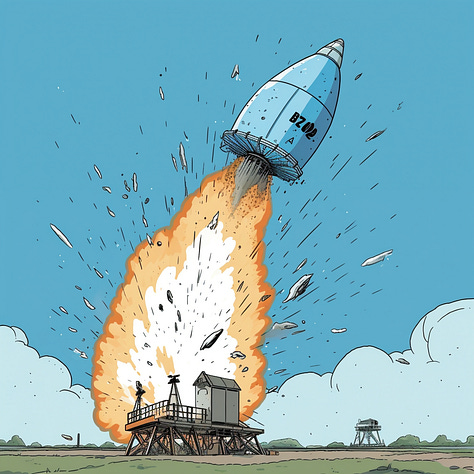

Schadenfreude pt.2: Rapid Unscheduled Failure

Elon Musk can’t win. He’s taken Twitter back to its early days (when it frequently crashed) and has made no secret of his strategy of learning through catastrophic failures, such as the “rapid unscheduled disassembly” of multi-million dollar spacecraft. Why wouldn’t his attempt to launch an extreme right-wing candidate for US president on his platform explode and provide everyone with a fantastic learning opportunity?

The engineering lead for Twitter’s Growth organisation, Foad Dabiri, announced on Twitter that he is leaving the company a day after Ron DeSantis’ US presidential campaign launch on the platform experienced technical issues. More than 80% of the workforce at Twitter has been cut since Elon Musk acquired the company in October 2022. It is unclear if Dabiri’s decision to leave is related to the glitches with the DeSantis event on the platform, and he did not provide a reason for his departure.

That’s all for this week

Thank you for reading and for sharing and commenting and all those things. I hope you found something you liked.

Antony

Curious. If you think Ron DeSantis is “extreme right-wing” then what would that make Richard Spencer, Nick Griffin, et al?