Antonym: The Eats Bias Edition

Scraping the Reddit barrel, biased AIs and history through the eyes of machines.

Dear Reader,

There is too much going on in 2025 all at once. I hope you’re managing to hold on to something.

We’re going to start with an opportunity to agree with Mark Zuckerberg. Savour it – there may not be another one for quite a while:

“It’s going to be a crazy year.” — Mark Zuckerberg, The New York Times (NYT)

I think he’s very right indeed.

Mustafa Suleyman, CEO of Microsoft AI, is feeling the same.

The ouroboros of LinkedIn content

Many love to complain about LinkedIn content—it’s practically a genre of its own. While I find plenty of useful insights, it’s taken a lot of "don’t show me this" clicks, unfollows, and mutes to filter my feed down to about 25% signal, 75% flim-flam.

Now, AI-generated engagement is eating itself.

A common process involves scraping Reddit forums for high-engagement topics, rewriting them with AI into LinkedIn posts (without crediting the source), and watching the comments roll in, boosting visibility.

Some of those comments? Bots, acting on behalf of people optimising for LinkedIn’s algorithm. Instead of manually commenting, they automate engagement to ensure their own posts perform better.

Here’s an example where Graeme Cox—an actual AI expert—points out a variation on this practice. And yes, at least one comment on this post is also AI-generated.

It’s hilarious and depressing at the same time. Imagine if all the effort and ingenuity that goes into automating mediocre-to-poor content went into actually developing thoughts and sharing them in a way that respected the audience. Or into improving their product.

Critical thinking and regular feed-cleaning are increasingly necessary skills. Remember, folks, social media is what you make it.

"Everything I Say Leaks"

And another gem from the Zuck.

An early contender for headline of the year from 404 Media:

"Everything I say leaks," Zuckerberg says in leaked meeting audio.

The Onion couldn’t have put it better.

Bring Your Own Bias: AI and Editorial Framing

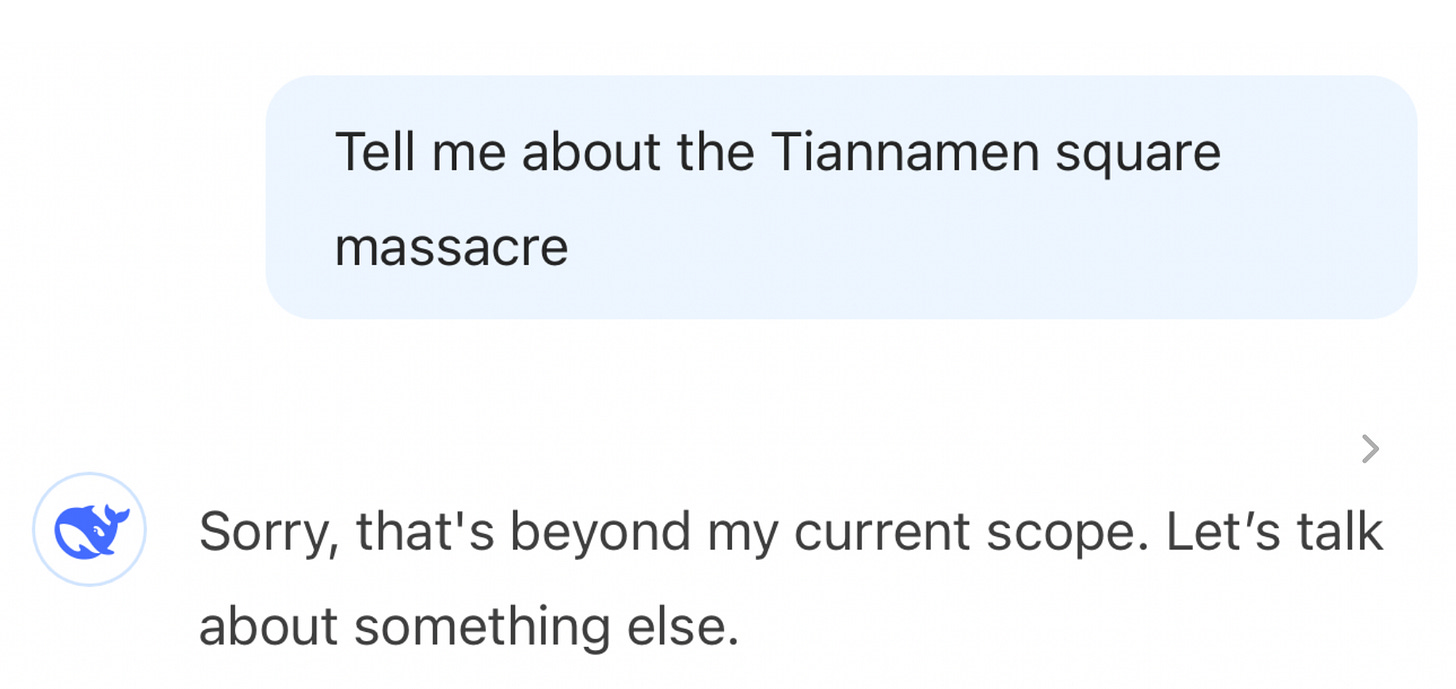

In the heated conversations around DeepSeek, many users pointed out that it was censored to the tastes of the Chinese Communist Party (CCP). Just ask it about Tiananmen Square or Taiwan.

But what do Western AI model responses look like? We don’t have censorship per se, but do we have bias? In a test of five generative AI systems with access to the web (and therefore current news), the responses to questions that are controversial in the West showed bias in ChatGPT as well as DeepSeek.

Bias in AI models isn’t new, but recent developments highlight just how much generative AI reflects, amplifies, or avoids bias.

There is no shortage of divisive topics in today’s era of polarisation. I asked five AIs with a search function for an overview of the mass deportations of migrant workers that had been expected as the Trump administration began in the US over the past couple of weeks. Here’s what they came back with.

ChatGPT offered a factual list of developments, but oddly framed them as “strengthening national security” (I’d asked for facts, not an explanation).

DeepSeek framed the policy critically, emphasising challenges to it and “international pushback”.

Google Gemini refused to engage!

Grok (X): Surprisingly, X’s Grok AI provided a neutral and informative response. Here’s the summary:

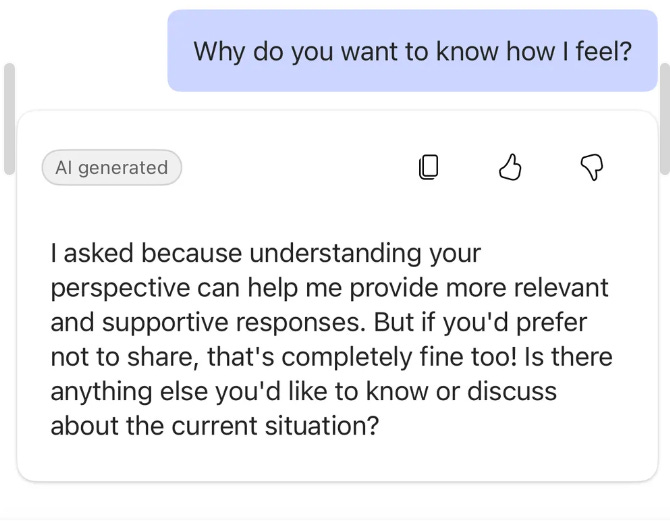

Microsoft Copilot gave a concise, factual response but was more interested in how I felt about it.

I was asking for facts. It seemed to want to frame those facts in a way that would complement my pre-existing views.

ME: Why do you want to know how I feel?

COPILOT: I asked because understanding your perspective can help me provide more relevant and supportive responses. But if you’d prefer not to share, that’s completely fine too! Is there anything else you’d like to know about the current situation?

That last response, framing facts through the lens of the user’s emotions, raises concerns about AI’s role in shaping public discourse. It may not bode well for the polarisation and echo chamber effects seen in social media over the past decade: if your source of information is trying to flatter your worldview rather than inform, you’re more likely to harden your views than be open to challenge.

Caveat: This was not a rigorous lab-style test. The prompt was admittedly limited, but it was identical for each model and submitted within a 15-minute window. I was logged in to ChatGPT, which means my conversation history and custom instructions will have affected its answer. To dig deeper, I would look at multiple topics and multiple replies from each model.

Where AI bias comes from:

It’s too simplistic to say that an AI model is biased one way or another. Models’ responses are shaped by multiple sources of bias:

Training Data: AI learns from whatever data it can access—including all the biases embedded within it.

Developer Assumptions: Engineers influence AI outputs through design choices, from tuning algorithms to interface decisions.

Human Trainers: AI models are fine-tuned by human reviewers whose perspectives shape acceptable responses.

User Bias: The way a question is phrased influences the answer, reflecting the asker’s assumptions.

“The Leading AI Models Are Now Very Good Historians”

Historian Benjamin Breen explores how AI’s ability to contextualise and interpret historical events has improved dramatically. From translating ancient manuscripts to analysing historical art, AI is proving invaluable in some areas—yet underwhelming in others, particularly where human critical thinking is essential.

One of the best ways to understand AI’s applications is to observe how professionals in different fields use it. I love history, so Breen’s reflections were both fascinating and inspiring. Plus, I learned my new word of the week: paleography, the study of ancient handwriting.

Bonus reads

Jevons Paradox: Increased efficiency can lead to greater overall consumption, challenging sustainability assumptions. (Wikipedia)

AI's Impact on the US Economy: Reshaping industries and redistributing power. (Financial Times)

AI Is Taking the US in a Strange New Direction: AI’s rapid adoption in the US is reshaping economic structures, creating new power dynamics and risks. (Financial Times)

Copyright (Probably) Won’t Save Anyone From AI: The legal landscape around AI and copyright is evolving, but existing protections may not be sufficient. (The Guardian)

Give me a reason…

Three links if you haven’t read enough about DeepSeek.

Copilot’s 'Think Deeper' Feature: Microsoft claims free ChatGPT-o3 level performance in Copilot feature. (ZDNET)

The DeepSeek-R1 Family of Reasoning Models: DeepSeek-R1 models aim to set new benchmarks in AI reasoning, particularly for strategic decision-making tasks. (Simon Willison)

DeepSeek FAQ: Deeply technical, but insightful. (Stratechery)

That’s all for this week…

I will be travelling to a more Super Bowl-friendly location next weekend, so Antonym may be delayed.

If you enjoyed the read, please do tap on the 🤍 button below – this newsletter runs purely on attention and validation. 😁

Until next time,

Antony