Antonym: The Loopy Edition

"We are not just learning about AI — we are learning how to learn with AI."

Dear Reader

This week I have added an appendix with a roundup of the latest releases from the major AI players. But this is not the whole story.

The launches are like railway lines being laid — impressive feats of engineering — but the real transformation lies elsewhere. It is the journeys now made possible, the new communities and enterprises springing up around the stations, the parts of the continent that were once remote but are about to be changed forever.

Railways changed how humanity understood time itself. In England, local time was once determined by the sun — it could differ by several minutes between Cornwall, Bristol, and London. With the spread of railways, "railway time" was introduced: time was set centrally for the first time to allow trains to run on standardised schedules. Minds had to adjust.

Similarly, the distance between Brighton and London shrank: what was once a day’s travel became a short excursion. The mental map of what could be done in a day was redrawn. What was once shocking — you went to Brighton for a day? — became novel, and then commonplace.

Today, we are once again redrawing our mental maps. But not just maps of physical space — maps of work, creativity, learning, and decision-making.

In A Thousand Brains, Jeff Hawkins explains how our minds are made of maps. Everything — ideas, assumptions, knowledge — is structured spatially in the brain. When the world changes, brains resist: updating the map takes energy. But once persuaded, the brain releases chemicals that create a flood of learning and adaptation.

At Brilliant Noise, we see this "mental map shift" again and again in AI workshops and coaching: denial becomes interest, then fascination, as people feel how the systems actually work.

A hundred years ago, "loopy" meant mentally ill. A hundred years before that, it meant crafty or deceitful.

I'd like to propose a new usage: "loopy" as the natural state of learning between humans and machines — continuous feedback, two-way adaptation, accelerating loops of improvement.

Individuals, teams, and organisations are moving through these loops as they work with AI. Broadly, they map to the Prepared Minds framework:

Task improvement: Doing old tasks better and faster.

Task reorganisation: Creating new ways of working based on AI capabilities.

System re-imagination: Rethinking business models, markets, and organisational structures entirely.

In the data-process-output framework we’ve discussed before, all of these improve much faster because the output of each loop feeds back in to improve the next one. For example, when I was developing an app on the AI-powered Lovable platform, I got stuck, as often happens in any workflow once complexity builds up. At this point many people advise simply starting again. I did, but with an extra step: I asked Lovable’s AI to write a retrospective analysis of the stalled project, then added its findings to the brief for a new one. The results were far better than the first time, with the machine’s own experience and detail boosting its next attempt.

We are not just learning about AI — we are learning how to learn with AI.

Three weak signals

These are three thoughts from working with AI at the moment. Not fully formed, but worth sharing, perhaps.

1. Google Workspace as a Competitive Advantage

The recent leaps in Google Gemini’s performance (you can now try Gemini 2.0 and 2.5 for free) make it a much more credible competitor to ChatGPT. Google has come a long way since the risible performance of Bard a few years ago, or even the tongue-tied, don’t-mention-the-culture-war responses Gemini 1.5 was giving a few weeks ago.

Progress is also being made on other fronts: there are now useful integrations between Google Workspace (Gmail, Docs, Calendar, Drive) and both ChatGPT and Claude. Powerful generative AI tools working with all of your files, email and calendar: that combination is starting to become a reality.

In theory we should be seeing this with Microsoft Office too, but based on our own experience and the clients we talk to, organisations that run on Google are getting more from their integrations, faster.

2. Markdown’s Moment

Markdown, the simple syntax for formatting text, is becoming more important. If you aren’t familiar with it, Markdown is easy to learn and may make all sorts of AI interactions just a little easier.

Many AI tools, including ChatGPT and Claude, format their outputs in Markdown.

Learning to write in Markdown makes documents more machine-readable, and structures information clearly for humans and AI alike.

It supports structured writing, better prompt engineering, and easier data transformation.
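As a small illustration of “easier data transformation”, here is a Python sketch that groups a Markdown brief by its headings in a few lines. The brief is an invented example, and the heading rule is deliberately simplified (it only recognises #-style headings and ignores edge cases such as # lines inside code blocks), so treat it as a sketch rather than a full Markdown parser.

```python
import re

# An invented example brief. Lines starting with '#' are headings; '-' lines are bullets.
BRIEF = """# Project brief
Build a booking app for a dog groomer.

## Must have
- Online calendar
- Email reminders

## Lessons from the last attempt
- Keep the data model simple
"""

def sections(markdown: str) -> dict[str, list[str]]:
    """Group the non-empty lines of a Markdown document under their nearest heading.
    Simplified: only '#'-style headings are recognised; text before the first heading is skipped."""
    result: dict[str, list[str]] = {}
    current = None
    for line in markdown.splitlines():
        heading = re.match(r"^#{1,6}\s+(.*)", line)
        if heading:
            current = heading.group(1).strip()
            result[current] = []
        elif line.strip() and current is not None:
            result[current].append(line.strip())
    return result

for title, lines in sections(BRIEF).items():
    print(title, "->", lines)
```

Because the structure is explicit in the text itself, the same document stays readable for a person while a program (or an AI) can pull out, say, just the “Must have” list.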

If you haven’t already, it is worth learning the basics of Markdown now. Here’s an easy intro course.

3. Actual, Usable AI Agents

After much hype and many underwhelming demos, practical AI agents have been arriving in force in the last few weeks.

For clarity, agents are AI systems that can make a plan, use tools and make decisions to complete tasks.
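The plan, use tools, decide cycle can be sketched as a simple loop. This is a toy illustration under stated assumptions, not how Manus, GenSpark or ChatGPT are actually built: the two tools (a calculator and a note-taker) and the task are hypothetical, and decide_next_step is a hard-coded stand-in for the AI model that would make those choices in a real agent.

```python
import operator
from typing import Callable, Optional

# Hypothetical tools. A real agent might have web search, a browser or an API here.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}
NOTES: list[str] = []

def calculator(expression: str) -> str:
    """Toy tool: evaluates a single 'a op b' expression, e.g. '120 + 45'."""
    a, op, b = expression.split()
    return str(OPS[op](float(a), float(b)))

def write_note(text: str) -> str:
    """Toy tool: saves a note to an in-memory list."""
    NOTES.append(text)
    return "note saved"

TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator, "write_note": write_note}

def decide_next_step(history: list[tuple[str, str]]) -> Optional[tuple[str, str]]:
    """Stand-in for the AI model: look at what has happened so far and choose the next
    (tool, input), or None when the task is done. A real agent would ask a model here."""
    used = [tool for tool, _ in history]
    if "calculator" not in used:
        return ("calculator", "120 + 45")          # plan step 1: work out the total
    if "write_note" not in used:
        total = history[-1][1]                     # reuse the previous tool's output
        return ("write_note", f"Total expenses: {total}")
    return None                                    # decision: nothing left to do

def run_agent(max_steps: int = 5) -> list[tuple[str, str]]:
    """Loop: decide, act with a tool, record the result; stop when done or out of steps."""
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        step = decide_next_step(history)
        if step is None:
            break
        tool_name, tool_input = step
        history.append((tool_name, TOOLS[tool_name](tool_input)))
    return history

print(run_agent())
print(NOTES)
```

The max_steps cap is a common safeguard in agent loops: it stops a system that keeps choosing new actions from running indefinitely.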

Apps like Manus and GenSpark are showing genuinely useful agent capabilities. Even ChatGPT is demonstrating increasingly agent-like behaviour (e.g., advanced web browsing, multi-step reasoning). OpenAI’s o3 model is now widely available in ChatGPT, and the speed and quality of its agentic capabilities (planning, tool use, micro-decisions) seem very effective, although some reports say it is more prone to hallucination.

This is already changing how we think about task delegation, workflow design, and future work models at Brilliant Noise. I’m interested to see what will unfold in the coming weeks.

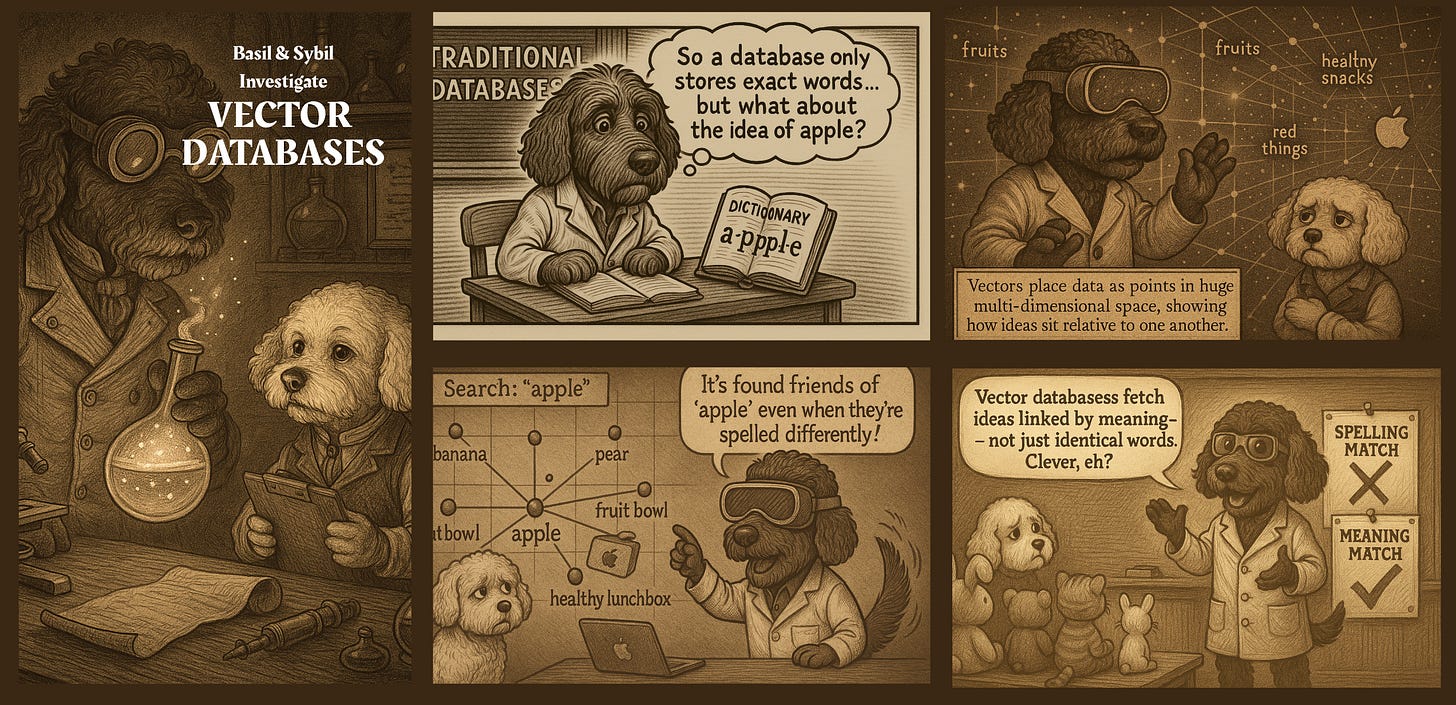

Basil & Sybil investigate

For reasons that will become clear, I wanted to explain the concept of vectorisation, as used in vector databases, in simple terms. I ended up making this cartoon about my dogs Basil and Sybil.

Making sense by making cartoons

Learning the language of AI literacy, we start to pick up useful technical words, sometimes from tech, sometimes from elsewhere. A term that was hanging about in my head without a full explanation was “vector databases” or “vectorising”.

Applying the Feynman test, where you find the gaps in your understanding by writing an explanation in plain language and noticing where you struggle to drop the jargon, I realised I hadn’t fully grasped it.

After some back-and-forth I settled on this explanation:

Vectors store the meaning or context of data (like a word, sentence, image) as directions and positions in a giant, multi-dimensional space.

Instead of just remembering the word itself (like "apple" = a string of letters), it remembers where "apple" sits in relation to lots of other ideas — e.g., fruits, red things, healthy food, brands (like Apple computers).

Because of that, when you search using a word or idea, the database can find related things, even if the exact word isn't used.

A vector database finds meaning neighbours, not just identical words.

It might suggest words, phrases, brands, objects, or ideas linked by meaning, not spelling.
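To make the “meaning neighbours” idea concrete, here is a minimal Python sketch, assuming tiny hand-made vectors. The three dimensions (fruitiness, redness, tech-ness) and all the numbers are invented for illustration; real embedding models learn hundreds or thousands of dimensions from data, and real vector databases use specialised indexes rather than checking every item. Closeness is measured with cosine similarity, one common choice, which compares the directions two vectors point in.

```python
import math

# Invented 3-D "embeddings": [fruitiness, redness, tech-ness].
# Real models learn far more dimensions; these numbers are made up for illustration.
VECTORS = {
    "apple": [0.9, 0.8, 0.3],        # a fruit, often red, and also a tech brand
    "banana": [0.9, 0.1, 0.0],
    "strawberry": [0.8, 0.9, 0.0],
    "laptop": [0.0, 0.1, 0.9],
    "fire engine": [0.0, 0.9, 0.1],
}

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Score how closely two vectors point the same way (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    length_a = math.sqrt(sum(x * x for x in a))
    length_b = math.sqrt(sum(y * y for y in b))
    return dot / (length_a * length_b)

def nearest_neighbours(query: list[float], k: int = 2) -> list[str]:
    """Return the k stored items whose vectors are closest in meaning to the query.
    Brute force: compares against every item, unlike a real vector database index."""
    ranked = sorted(VECTORS, key=lambda name: cosine_similarity(query, VECTORS[name]), reverse=True)
    return ranked[:k]

# A query meaning roughly "red fruit", expressed as a position rather than as letters.
print(nearest_neighbours([0.9, 0.9, 0.0]))
```

Searching with the “red fruit” vector returns strawberry and apple, even though neither word appears in the query, while the fire engine (red, but not a fruit) ranks lower. A tech-heavy query such as [0.0, 0.0, 1.0] brings up the laptop and then apple instead, echoing the Apple-the-brand association above.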

It’s the multi-dimensional bit that spins me out. I tried to create visualisations of vectorising that kept a bit of the wonder about the concept in them (by developing metaphors and creative briefs then prompting image and video generators) but couldn’t get anything that was both useful and exciting.

Here’s a screenshot of some of the ideas, created with ChatGPT o3:

The sharp-eyed reader will have spotted that number two is the brief that created the GIF at the top of this week’s newsletter.

So I settled on the idea of explaining it in a cartoon strip. Borrowing from the approach of Tianyu Xu, whom we mentioned in a previous Antonym, I created characters based on my dogs Basil and Sybil, then generated some script ideas from my explanation and selected one in which Basil was a scientist. Taking an anything-but-Ghibli art direction, I used a faux-Victorian comic strip style.

Trying to create the whole four-panel strip in one go gave patchy results in ChatGPT, so I separated out the panels and created them one at a time (with AI, always break tasks down into steps for better results). There are a couple of glitches in the final result, but I’m pretty happy with the outcome. The title panel was added in Canva, where I also assembled the panels into a single strip.

That’s all for this week

I hope you found something interesting here. See you next week.

Antony