The main thing I want us to be asking together is: What did we feel and where did we feel it? (All coherent intellectual work begins with a genuine reaction.)

— George Saunders, A Swim In A Pond In The Rain

Dear Reader,

Feelings and words both reward close attention in this AI moment we are living through. Feelings are in flux, and so is language.

For want of the right word for something new, we make do with muddle until language settles. This week we’re going to talk about what to call the things we are beginning to do with AI. Different companies are trying to get us to use their names for different bits of AI. It’s not the most important thing they have to think about, but will prompting end up being called “GPT-ing”, or the little intelligent helpers we make be called “Googlets”?

Fata morgana

Analysing trends and emerging patterns in an industry is often called horizon-scanning. In cybersecurity, home of the sexiest jargon in tech, people trying to figure out what hackers will come up with next talk about the “threat horizon”. The thing about fata morgana is that your perception and the facts are both warping what you see.

Fata morgana is posh for mirage or hallucination, but it also describes an actual phenomenon at sea that has caused people to report seeing floating ships, fairy cities, UFOs and other culturally dependent visions on the horizon.

Sailors in the straits between Sicily and mainland Italy named the phenomenon after Morgan Le Fay, the dark witch or goddess in the Arthurian myths.

We’re seeing a lot of fata morgana in the shimmering heat around technology at the moment. Castles in the sky and floating ships that are glimpses of things just over the horizon. But we can misread what we are seeing. We see a shape, it seems familiar, we start to draw conclusions without all the necessary context.

Agents or Bots or Whats?

Squint and you can see new shapes emerging in the way that generative AI is being used. There are stories we keep telling about how things will be, but the truths are necessarily sui generis, without precedent. Words don’t help, because the words we have all have other meanings.

Sometimes you can carve a new word out of an old one – car from “horseless carriage” – or qualify an old word until the new meaning takes over: “digital computer” replaced “computer”, which was a job for a human, until humans didn’t do the job any more and we could drop the “digital”. These things aren’t decided, they emerge from the culture.

The first rule of communications for new technologies is: do not let geeks decide names.

One sign that no AI products are yet ready to fit the old paradigm of apps or solutions is that the tech giants are changing their names every five minutes. There are still parts of Google Workspace that tell you “Duet” is on offer, or “Bard”, and then there are four different versions of Gemini, the best of which may or may not be called Gemini Ultra or Pro. It may or may not accept PDF files this week and may or may not be able to generate an image – but that’s another story.

Google was initially called “BackRub”, which was obviously awful and creepy. According to Business Insider – and who are we not to believe Business Insider – “Google” was chosen from a bunch of brainstormed names because (a) it was a really big number (a googol, written out, is a one followed by 100 zeroes), and (b) the domain google.com was available when the intern misspelt “googol”.

A “Bot” in generative AI is a custom chatbot, a version of the AI that you can create with a prompt and maybe some data. It takes no coding or technical skills, but can benefit from either.

This week, Google started encouraging people to build generative AI apps and call them “agents”. It offered 101 AI use cases from major companies, grouped by type of agent: customer, employee, creative, data, code and security. Some of these are things companies plan to do rather than are already doing, but it makes for an interesting read.

Previously, “AI agents” had been used to describe systems designed to perform tasks independently and make autonomous decisions. In its Next 24 conference publicity, Google seems to be broadening the term or at least blurring its boundaries. “Apps” isn’t accurate. “Bots” might have too many negative connotations.

I’m sticking with “bots” for now when we’re working with clients, but it will be interesting to see where we settle. I also suspect that Google’s broad use of the word “agents” might soften the impact of OpenAI’s forthcoming GPT-5, as the ability for users to create AI agents is expected to be a key feature of the new model.

AI won’t fire you, the money will

From last week’s BN Edition, our sibling newsletter, on The New York Times’s coverage of Wall Street waking up to the overhead-shedding potential of generative AI:

A comforting mantra for those coming to terms with AI is “AI will not replace you, but someone with AI skills will.”

Wall Street’s reaction to generative AI contrasts with the mood music in most of the working world. Generally, redundancies are a sign of defeat. They are undertaken with regret, remorse and sadness, and they signal that the current business model isn’t working.

Also see: Accenture results show rapid growth in consultancy services around generative AI

Other things worth reading…

FT: [£] UK Rethinks AI Legislation as Alarm Grows Over Potential Risks

The UK is developing laws to regulate AI, targeting large language models. This includes a requirement for safety tests and possibly sharing algorithms with the government. There are concerns about tech monopolies and the need for AI-specific regulation, despite the goal of not hindering innovation. Current laws are being adapted in the meantime.

Comment is Freed Newsletter: [£] Can AI save the NHS?

The NHS faces challenges like staff shortages and increasing demand. AI could be the key to enhancing NHS productivity. However, implementing AI is complex and politicians are underestimating the challenges, chiefly:

Data must be accurate and without bias.

AI systems must integrate with existing systems.

Software needs thorough testing.

Staff must be trained.

For effective AI use, the NHS needs realistic goals, focused AI investment, and attention to healthcare needs.

The Economist: [£] Generative AI has a Clean-Energy Problem

AI’s appetite for electricity is a growing problem. Data centres use 1–2% of the world’s electricity, and AI could double their consumption by 2026, pushing up US energy use and straining the grid.

Nuclear fusion could solve AI's energy issues, but its future is uncertain.

Rising energy and GPU costs may slow AI growth and delay clean technology adoption.

WSJ: [£] Inside Amazon’s Push to Crack Trader Joe’s—and Dominate Everything. An excerpt from The Everything War: Amazon’s Ruthless Quest to Own the World and Remake Corporate Power, by Dana Mattioli, which is out this week.

Amazon’s efforts to analyse Trader Joe’s products for its Wickedly Prime brand illustrate the kind of pressure on employees that can lead to ethically questionable behaviour. Using internal and third-party seller data, Amazon aims to develop hit products and undercut competitors on price.

Employee Culture: Amazon's high turnover and competitive practices, such as stack ranking, drive its success.

Data Usage: Leveraging seller data, Amazon reverse-engineers popular items to its advantage.

Trader Joe’s Tactics: A former Trader Joe’s manager was pressed for confidential information to help replicate top-selling items.

FT: [£] The Rise of the Chief AI Officer.

As AI grows, more organisations are creating a new role: chief AI officer (CAIO). This role deals with AI's opportunities and risks:

White House involvement: In the US, federal agencies are now required to designate CAIOs for accountability and oversight.

Responsibilities: CAIOs improve efficiency, find new revenue, and handle ethical and security risks. They need a deep understanding of AI and related fields.

Wonders…

NPR: Microchip graffiti is being rediscovered. Take a look at this extreme game of “Where’s Wally?” (“Where’s Waldo?” in the US).

Anti-gravity unlocked – a combination of magnets and graphene could allow floating, super-sensitive measurement devices.

Recommendations…

Larry David interviewed on the SmartLess podcast as Curb Your Enthusiasm comes to the end of its 25-year run. “How are you feeling about doing this podcast, Larry?” “I always regret saying yes to these things.”

This Town (BBC iPlayer). Just finished this six-episode masterpiece from the writer of Peaky Blinders about a ska band in the early-80s West Midlands. The wardrobe is as exquisite as the writing and performances from a brilliant cast.

That’s all for this week…

Thank you for reading. If you liked it, stick a share on it for me, would ya?

Antony

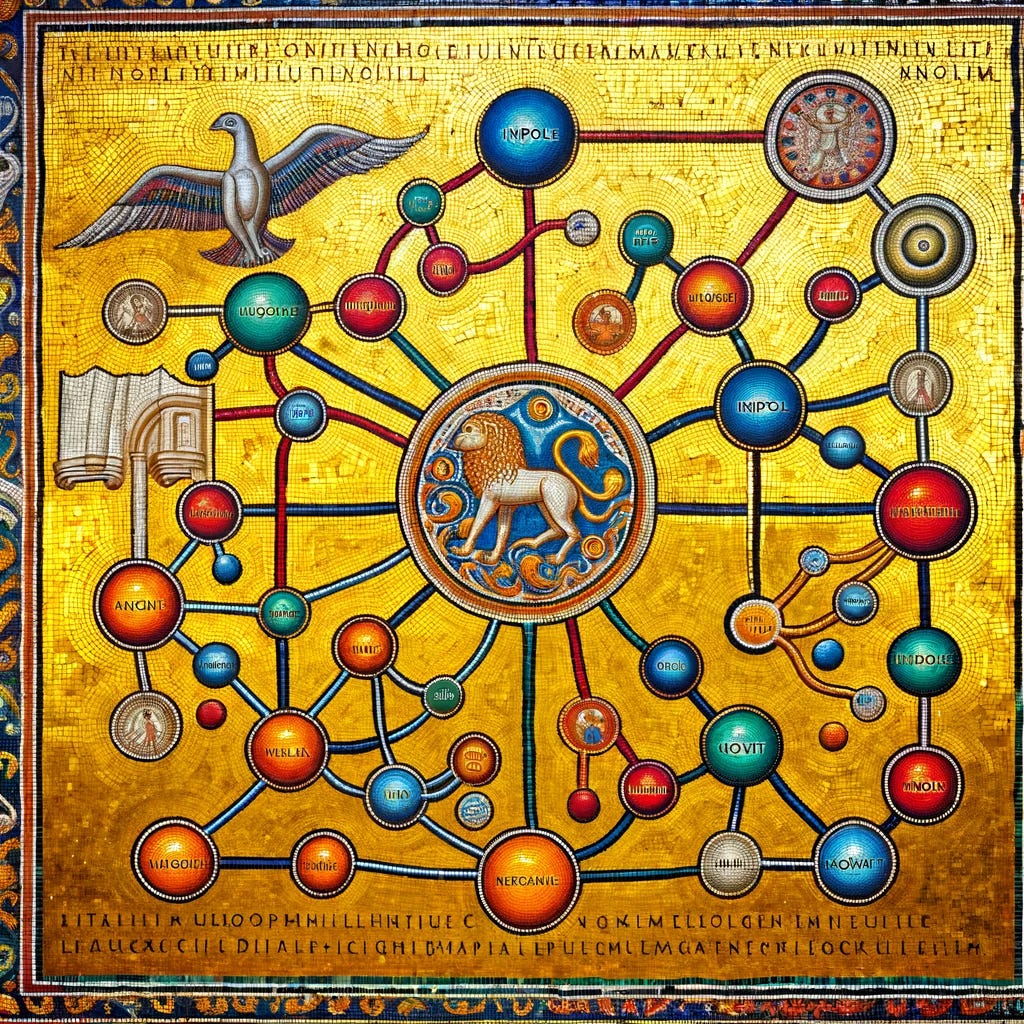

P.S. The promised explanation of the bizarre illustration this week. To resist tangents in the main email, I took this out and put it here:

It started with the fata morgana, which was named for Morgan Le Fay from the Arthurian legends. How come Arthurian myths were so big with sailors in the straits between Italy and Sicily?

So, the image, by DALL-E 3, is a mosaic in the Norman style showing a neural network. Neural network diagrams always look like mystical Kabbalah diagrams from old Jewish mysticism, so it’s come out rather nicely.

There’s a Norman church at the end of my road, but it doesn’t have any mosaics like this. You do find them in Sicily, which the Normans conquered in the eleventh century, around the same time as they invaded England. It’s a shame, because we destroyed most of the Norman art here during the Reformation and under the English equivalent of ISIS, the Puritan rule of Oliver Cromwell after the English Civil War.

Here’s a mosaic from Sicily. Gorgeous. I hope I can visit some day and see some of them in situ.